How to Set Up Robots.txt on Blogger with the Correct Code

crocodileberdiri.blogspot.com - A robots.txt file is a plain-text file that tells crawlers such as Googlebot which pages of a website or blog they may crawl. It can allow or disallow pages for Google's crawlers, both the smartphone (mobile) crawler and the desktop crawler.

How pages are crawled can affect how quickly they are indexed in Google Search. That makes robots.txt an important part of website SEO, and it is one of the techniques used by bloggers, content writers, SEO experts, and digital marketers.

How to Set Up Robots.txt on Blogspot or Blogger

If you built your website or blog on the Blogspot (Blogger) platform, you can add a robots.txt file from the Settings menu in the Blogger dashboard.

Steps to Create a Robots.txt File on Blogger or Blogspot:

- Open your Blogger (Blogspot) dashboard

- Then open the Settings menu

- Then scroll down until you find Crawlers and indexing

- After that, turn on Enable custom robots.txt

- Then click Custom robots.txt

Then fill it in like this:

    User-agent: Mediapartners-Google
    Disallow:

    User-agent: *
    Disallow:
    Allow: /

    Sitemap: https://theblogrix.blogspot.com/sitemap.xml
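To see how a crawler would read this file, here is a minimal sketch that parses the rules with Python's standard `urllib.robotparser` module and asks whether two crawlers may fetch a page. The post URL is a hypothetical example, and Python's parser only approximates Google's own matching logic, so treat the result as a sanity check rather than a guarantee.

```python
from urllib.robotparser import RobotFileParser

# The "allow everything" robots.txt from above, one directive per line.
ROBOTS_TXT = """\
User-agent: Mediapartners-Google
Disallow:

User-agent: *
Disallow:
Allow: /

Sitemap: https://theblogrix.blogspot.com/sitemap.xml
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

# Hypothetical post URL, used only for illustration.
post_url = "https://theblogrix.blogspot.com/2021/10/example-post.html"

# An empty "Disallow:" blocks nothing, so both crawlers should be allowed.
adsense_allowed = parser.can_fetch("Mediapartners-Google", post_url)
googlebot_allowed = parser.can_fetch("Googlebot", post_url)
sitemaps = parser.site_maps()  # requires Python 3.8 or newer

print(adsense_allowed, googlebot_allowed, sitemaps)
```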

The robots.txt file above is the safest one to use for SEO because it blocks nothing, which also means that every page can be crawled by Google. According to John Mueller, this code lets Google show ads to visitors on every page of your website. The drawback is that crawling takes longer, so you can block pages that Google's bots do not need to crawl, such as blog URLs that start with /search/.

Examples of URLs that Google's bots do not need to crawl:

- https://theblogrix.blogspot.com/search/label/Windows

- https://theblogrix.blogspot.com/search/label/SEO

And so on.

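In that case, use a robots.txt file that disallows pages starting with /search/. The rule belongs in the User-agent: * group so that it applies to Googlebot, while the Mediapartners-Google group stays open so the AdSense crawler can still read every page:

    User-agent: Mediapartners-Google
    Disallow:

    User-agent: *
    Disallow: /search/
    Allow: /

    Sitemap: https://theblogrix.blogspot.com/sitemap.xml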
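A quick way to confirm that label pages are blocked while posts stay crawlable is to run a list of URLs through the rules. The sketch below assumes the /search/ rule sits in the `User-agent: *` group; `blocked_urls` is a hypothetical helper name, not part of any library.

```python
from urllib.robotparser import RobotFileParser

# Assumed layout: the /search/ rule is in the "User-agent: *" group.
ROBOTS_TXT = """\
User-agent: Mediapartners-Google
Disallow:

User-agent: *
Disallow: /search/
Allow: /

Sitemap: https://theblogrix.blogspot.com/sitemap.xml
"""


def blocked_urls(robots_txt, user_agent, urls):
    """Return the URLs that user_agent may not crawl under robots_txt."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [url for url in urls if not parser.can_fetch(user_agent, url)]


urls = [
    "https://theblogrix.blogspot.com/search/label/Windows",
    "https://theblogrix.blogspot.com/search/label/SEO",
    "https://theblogrix.blogspot.com/2021/10/ubersuggest-tools-cara-research-keyword.html",
]

blocked_for_googlebot = blocked_urls(ROBOTS_TXT, "Googlebot", urls)
blocked_for_adsense = blocked_urls(ROBOTS_TXT, "Mediapartners-Google", urls)

print(blocked_for_googlebot)  # the two /search/ URLs
print(blocked_for_adsense)    # empty: the AdSense group blocks nothing
```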

Disallow Crawling of Specific Pages and Posts

Suppose you want to stop Googlebot from crawling certain pages of your website, for example one article and one static page like these:

- https://theblogrix.blogspot.com/2021/10/ubersuggest-tools-cara-research-keyword.html

- https://theblogrix.blogspot.com/p/about-us-buayaberdiriblogspotcom-team.html

Then you can create a robots.txt file like this:

    User-agent: Googlebot
    Disallow: /2021/10/ubersuggest-tools-cara-research-keyword.html
    Disallow: /p/about-us-buayaberdiriblogspotcom-team.html

    User-agent: *
    Disallow:
    Allow: /

    Sitemap: https://theblogrix.blogspot.com/sitemap.xml
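Each Disallow line must contain only the path of the page, not the full URL, and it has to match the page address exactly. As a small sketch of that step, the hypothetical helper `googlebot_group` below derives the paths from full URLs with Python's `urllib.parse`, which avoids typing them by hand.

```python
from urllib.parse import urlparse


def googlebot_group(urls):
    """Build a 'User-agent: Googlebot' group that disallows each URL's path."""
    lines = ["User-agent: Googlebot"]
    for url in urls:
        # robots.txt rules use the path only, never the scheme or host.
        lines.append(f"Disallow: {urlparse(url).path}")
    return "\n".join(lines)


pages = [
    "https://theblogrix.blogspot.com/2021/10/ubersuggest-tools-cara-research-keyword.html",
    "https://theblogrix.blogspot.com/p/about-us-buayaberdiriblogspotcom-team.html",
]

group = googlebot_group(pages)
print(group)
```

The printed group can be pasted above the `User-agent: *` group in the custom robots.txt box.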

Allow Googlebot to Crawl All URLs

If you want to allow Googlebot to crawl every page of your website, you can use the robots.txt below:

    User-agent: Googlebot
    Disallow:

    User-agent: *
    Disallow:
    Allow: /

    Sitemap: https://theblogrix.blogspot.com/sitemap.xml

Disallow Crawling of Blog Images

If you do not want Google Images to crawl an image on one of your article pages, you can add a Googlebot-Image group that disallows the image's path. Keep in mind that robots.txt rules only match paths on the host that serves the file, so images that Blogger stores on a separate host such as 1.bp.blogspot.com may not be affected by a rule in your blog's robots.txt:

    User-agent: Googlebot-Image
    Disallow: /1.bp.blogspot.com/-DHjDVSYMjN4/YWabYL2d2CI/AAAAAAAAJ6A/O08eCpEojE0h7W0oAFYngMh57KZxXvBmACLcBGAsYHQ/w640-h240/Riset%2BSEO%2BKeywords%2BRangking%2Bdi%2BUbersuggest%2BNeil%2BPatel%2B%2528theblogrix.blogspot.com%2529.png

    User-agent: *
    Disallow:
    Allow: /

    Sitemap: https://theblogrix.blogspot.com/sitemap.xml
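A robots.txt file only governs URLs on the host that serves it, so before writing an image rule it helps to check where the image is actually hosted. The sketch below compares host names with `urllib.parse`; `same_host` is a hypothetical helper, and the image and post URLs are shortened stand-ins used only for illustration.

```python
from urllib.parse import urlparse


def same_host(robots_url, target_url):
    """True if target_url is on the host whose robots.txt is robots_url."""
    return urlparse(robots_url).netloc.lower() == urlparse(target_url).netloc.lower()


robots_url = "https://theblogrix.blogspot.com/robots.txt"
# Hypothetical, shortened image URL on Blogger's image host.
image_url = "https://1.bp.blogspot.com/example/w640-h240/example.png"
# Hypothetical post URL on the blog itself.
post_url = "https://theblogrix.blogspot.com/2021/10/example-post.html"

image_covered = same_host(robots_url, image_url)
post_covered = same_host(robots_url, post_url)

print(image_covered, post_covered)
```

If the image is not covered, a rule in the blog's robots.txt would not be expected to stop it from being crawled.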

Allow Crawling of All Blog Images

If you want to allow Google's image crawler to crawl all of your images, you can create a robots.txt file like this:

    User-agent: Googlebot-Image
    Disallow:

    User-agent: *
    Disallow:
    Allow: /

    Sitemap: https://theblogrix.blogspot.com/sitemap.xml

Creating a robots.txt file on Blogspot or Blogger is easy, isn't it? With robots.txt you can control which pages may be crawled by the AdSense crawler (Mediapartners-Google), Googlebot, and Googlebot-Image.
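All of the files in this article share one pattern: a group per crawler, each with its Disallow lines, followed by the sitemap. As a rough sketch of that pattern, the hypothetical function `render_robots_txt` below builds the text from a dictionary of crawler names and blocked paths. The groups passed in at the end are just one possible combination, not a recommendation.

```python
def render_robots_txt(groups, sitemap_url):
    """Render robots.txt text from {user_agent: [disallowed paths]}.

    An empty list produces an empty 'Disallow:' line, which blocks nothing.
    """
    blocks = []
    for user_agent, disallowed in groups.items():
        lines = [f"User-agent: {user_agent}"]
        if disallowed:
            lines += [f"Disallow: {path}" for path in disallowed]
        else:
            lines.append("Disallow:")
        blocks.append("\n".join(lines))
    blocks.append(f"Sitemap: {sitemap_url}")
    # A blank line separates each group and the sitemap line.
    return "\n\n".join(blocks) + "\n"


robots_txt = render_robots_txt(
    {
        "Mediapartners-Google": [],
        "Googlebot-Image": [],
        "*": ["/search/"],
    },
    "https://theblogrix.blogspot.com/sitemap.xml",
)
print(robots_txt)
```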

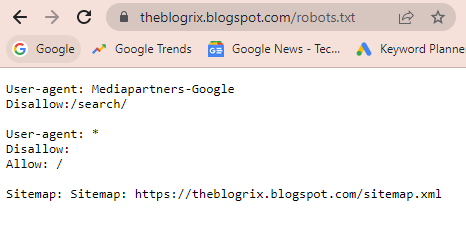

How to Test a Blogspot or Blogger Robots.txt File

Now you can test whether the robots.txt file you created in the Blogger settings is correct and free of errors.

To check that your robots.txt file is live, open /robots.txt at the root of your blog's domain in a browser, like this:

Example:

https://theblogrix.blogspot.com/robots.txt

The page will display the rules you saved in the Blogger settings.
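The same check can be scripted. The sketch below builds the robots.txt address from any URL on the blog and, optionally, downloads the live file with Python's standard library. Both function names are hypothetical, the post URL is only an example, and the download line is commented out because it needs network access.

```python
from urllib.parse import urljoin
from urllib.request import urlopen


def robots_txt_url(blog_url):
    """Return the robots.txt URL at the root of the blog's domain."""
    return urljoin(blog_url, "/robots.txt")


def fetch_robots_txt(blog_url, timeout=10):
    """Download the live robots.txt and return it as text (needs network access)."""
    with urlopen(robots_txt_url(blog_url), timeout=timeout) as response:
        return response.read().decode("utf-8", errors="replace")


# Any page on the blog resolves to the same robots.txt address.
url = robots_txt_url("https://theblogrix.blogspot.com/2021/10/some-post.html")
print(url)

# To download the live file, uncomment the next line:
# print(fetch_robots_txt("https://theblogrix.blogspot.com"))
```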

Final Words:

That is how to write the code for a robots.txt file easily. Used well, it makes your website more SEO-friendly and can speed up the indexing of your pages, articles, and selected images.
